In modern automated manufacturing environments, robotic vision-guided technology has emerged as a key enabler of precision and flexibility. By equipping robotic arms with "intelligent eyes," this technology lets them perceive and analyze complex scenes in real time, identifying the position, orientation, and features of target objects. This capability enables accurate picking, assembly, and inspection across diverse applications, including automotive parts assembly lines, logistics sorting centers, electronics manufacturing, and medical equipment assembly.

This article explains how robotic vision-guided systems work, covering the entire process from perception and processing to execution, and shows how visual data is translated into precise robotic actions.

1. Perception Phase: Image Acquisition and Preprocessing

Image Acquisition

The perception phase begins with image acquisition, typically using high-resolution industrial cameras (with CCD or CMOS sensors) paired with suitable optical lenses. The cameras are either mounted on the robotic arm itself or fixed near it, capturing the work area from predetermined viewpoints. Lighting design plays a crucial role: appropriate illumination increases contrast between the target and the background and reduces interference from shadows and reflections, which yields more consistent, higher-quality images.

Image Preprocessing

Raw images often contain noise and uneven illumination, which can degrade the accuracy of subsequent analysis. The preprocessing stage corrects these problems before feature extraction. Key preprocessing steps include:

- Denoising: Techniques like median or Gaussian filtering are used to remove random noise.

- Smoothing: Suppresses small intensity fluctuations and jagged edges so that edge detection responds to real object boundaries rather than noise.

- Grayscale or Color Space Conversion: Converts color images to grayscale or other suitable color spaces for feature extraction.

- Histogram Equalization: Enhances overall image contrast to make target features more discernible.
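The preprocessing steps above can be sketched in plain Python. This is a minimal, illustrative sketch rather than a production pipeline: it assumes 8-bit images stored as lists of rows, RGB pixels as `(r, g, b)` tuples, and the ITU-R BT.601 luma weights for grayscale conversion. The function names are hypothetical; real systems would normally call an optimized image-processing library instead.

```python
# Minimal preprocessing sketch in pure Python (illustrative assumptions:
# 8-bit intensities 0-255, images as lists of rows, RGB pixels as (r, g, b)).


def to_grayscale(rgb):
    """Convert an RGB image to grayscale with ITU-R BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb]


def median_filter_3x3(img):
    """Denoise by replacing each pixel with the median of its 3x3 neighbourhood.

    At the borders the window is clipped to pixels that exist; for the
    resulting even-sized windows the upper median is taken.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = sorted(img[j][i]
                            for j in range(max(0, y - 1), min(h, y + 2))
                            for i in range(max(0, x - 1), min(w, x + 2)))
            out[y][x] = window[len(window) // 2]
    return out


def equalize_histogram(img, levels=256):
    """Stretch contrast by mapping intensities through the cumulative histogram."""
    flat = [p for row in img for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # count of the darkest level present
    n = len(flat)
    if n == cdf_min:  # a single intensity level: nothing to stretch
        return [row[:] for row in img]
    lut = [round((c - cdf_min) / (n - cdf_min) * (levels - 1)) for c in cdf]
    return [[lut[p] for p in row] for row in img]
```

A median filter is used for denoising here because it discards an isolated outlier (salt-and-pepper noise) instead of averaging it into neighbouring pixels, as a Gaussian filter would.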

2. Processing Phase: Feature Extraction and Object Recognition

Feature Extraction

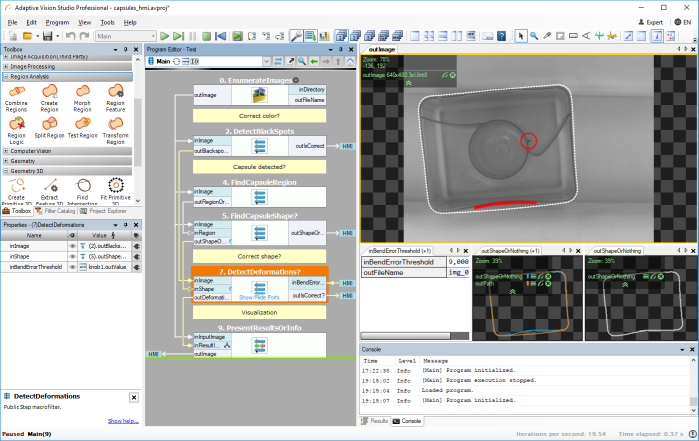

Once preprocessed, the images proceed to feature extraction, where algorithms identify key attributes of the target object, such as edges, contours, textures, colors, and shapes. Modern systems increasingly use machine learning and deep learning to extract complex, robust features automatically, which makes them more adaptable to variations in lighting, background, and part appearance.
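As a concrete example of a classic hand-crafted feature, the sketch below extracts edges with the Sobel operator and thresholds the gradient magnitude. It assumes a grayscale image stored as a list of rows and a hypothetical `threshold` chosen by hand; it illustrates edge features only and is not a stand-in for the learned features a deep network would produce.

```python
import math

# 3x3 Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(img, threshold):
    """Return a binary edge map (1 = edge) from the Sobel gradient magnitude.

    Border pixels stay 0 because the 3x3 kernel does not fit there.
    """
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    p = img[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 1
    return edges
```

On an image with a dark left half and a bright right half, the pixels on either side of the boundary are marked as edges while the flat regions are not.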

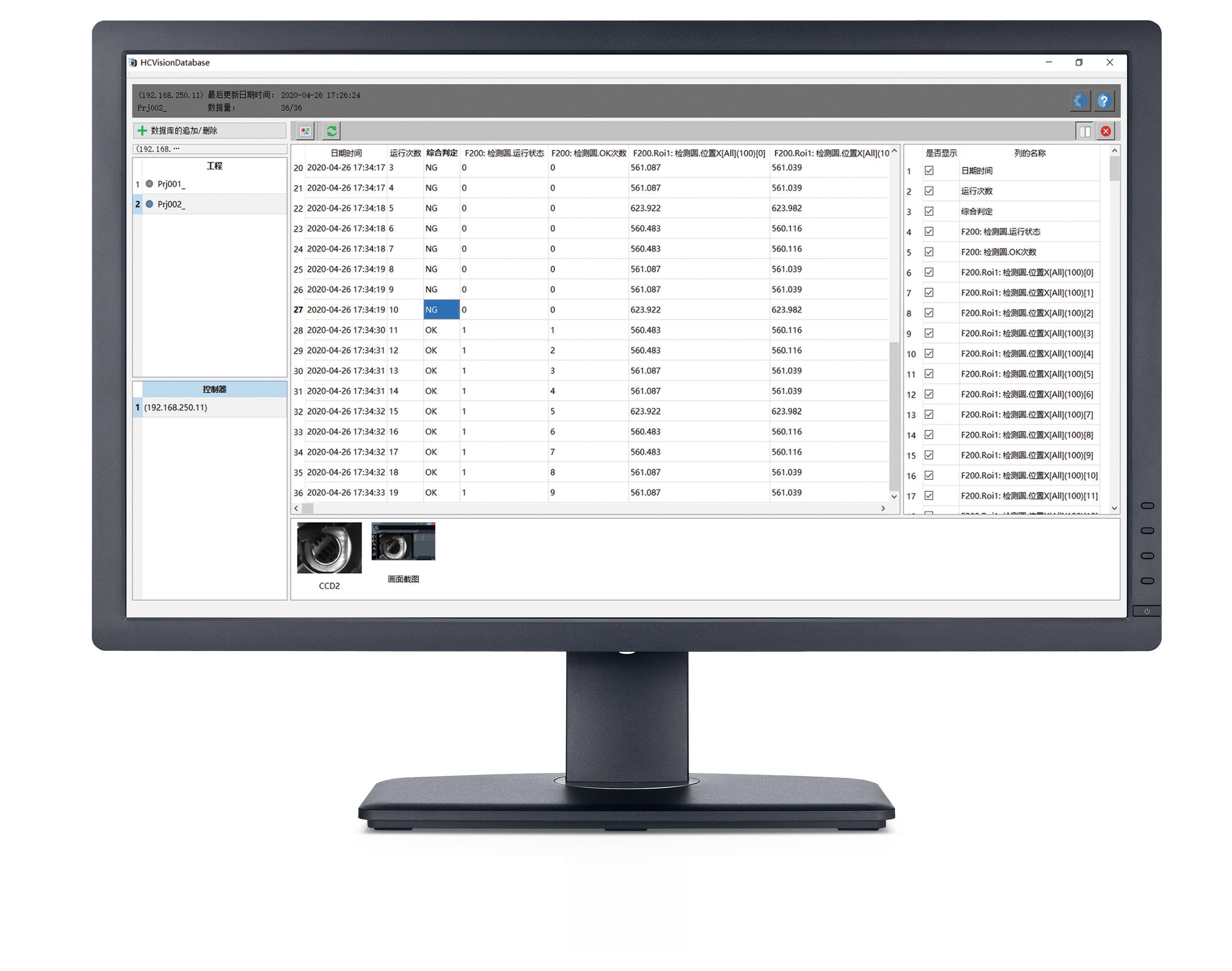

Object Recognition and Localization

Using the extracted features, the system recognizes the object with methods such as pattern recognition, template matching, machine learning classifiers, or deep neural networks. Depending on the task, this may involve single-object detection, multi-object segmentation, or object classification. Once the target is identified, the system computes its precise position (in image coordinates) and orientation, accounting for in-plane rotation and scale variations.
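Two of the recognition and localization ideas above can be sketched briefly: template matching by minimizing the sum of squared differences (SSD), and estimating an object's centroid and in-plane orientation from the image moments of a binary mask. Both functions are hypothetical illustrations on list-of-rows images; the brute-force matcher assumes the object appears at the template's rotation and scale, which practical systems must handle separately.

```python
import math


def match_template_ssd(image, template):
    """Slide the template over the image; return (row, col, ssd) of the
    top-left corner with the smallest sum of squared differences."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best = None
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            ssd = sum((image[y + j][x + i] - template[j][i]) ** 2
                      for j in range(th) for i in range(tw))
            if best is None or ssd < best[2]:
                best = (y, x, ssd)
    return best


def centroid_and_orientation(mask):
    """Centroid (x, y) and principal-axis angle (radians) of a non-empty
    binary mask, from first- and second-order central moments.
    The angle is measured from the image x-axis; image y points down."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no object pixels")
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, theta
```

A horizontal bar yields an angle of 0, and a 45-degree diagonal yields pi/4.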

3. Hand-Eye Calibration and Coordinate Transformation

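Hand-eye calibration is a critical step in vision-guided systems. It establishes the geometric relationship between the camera coordinate system and the robot's coordinate system: the robot base when the camera is fixed in the cell (eye-to-hand), or the end effector when the camera is mounted on the arm (eye-in-hand). By moving the robot through several poses while observing a calibration target, the system estimates a transformation matrix. Combined with the camera's intrinsic calibration, this matrix converts object locations detected in image coordinates into Cartesian coordinates the robotic arm can use.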
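The sketch below shows how a detected pixel might be turned into a robot-base coordinate. It assumes an eye-to-hand setup, an ideal pinhole camera with known intrinsics `fx, fy, cx, cy` (lens distortion ignored), a known depth for the point (for example from a depth sensor or a known work-surface height), and a 4x4 camera-to-base transform `T_base_cam` obtained from hand-eye calibration. All names and numbers are hypothetical.

```python
# Hypothetical eye-to-hand example: pixel -> camera frame -> robot base frame.


def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a known depth (metres along the optical
    axis) into camera coordinates with an ideal pinhole model."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def transform_point(T, p):
    """Apply a 4x4 homogeneous transform, given as a list of rows, to point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3]
                 for r in range(3))


if __name__ == "__main__":
    # Assumed calibration result: camera 1.0 m above the base plane, offset
    # 0.5 m along x, looking straight down (180-degree rotation about x).
    T_base_cam = [[1, 0, 0, 0.5],
                  [0, -1, 0, 0.0],
                  [0, 0, -1, 1.0],
                  [0, 0, 0, 1]]
    p_cam = pixel_to_camera(380, 240, 0.8, fx=600, fy=600, cx=320, cy=240)
    print(transform_point(T_base_cam, p_cam))  # approximately (0.58, 0.0, 0.2)
```

For an eye-in-hand camera the same calculation applies, but the calibrated transform maps into the end-effector frame and must be chained with the robot's current flange pose to reach base coordinates.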

4. Planning and Execution Phase: Path Planning and Motion Control

Path Planning

Once the target's position is determined in the robotic arm's coordinate system, the system plans an optimal or feasible path to guide the robot to the target point. Path planning takes into account kinematic constraints, collision avoidance, workspace limitations, and potential dynamic obstacles to produce smooth, efficient, and safe trajectories.
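Industrial planners usually work in the arm's joint space with sampling-based or optimization-based methods. As a much simpler stand-in that still shows collision avoidance, the sketch below runs A* search on a 2-D occupancy grid. The grid representation, 4-connected moves, and unit step cost are illustrative assumptions, not how any particular robot controller plans.

```python
import heapq


def astar_grid(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (1 = obstacle) using A*
    with a Manhattan-distance heuristic. Returns the list of (row, col) cells
    from start to goal, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

Because the Manhattan heuristic never overestimates the remaining cost on a 4-connected unit-cost grid, the returned path is a shortest one; smoothing it into a continuous trajectory would be a separate step.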

Motion Control

The motion control module converts the planned path into specific joint angle commands or Cartesian end-effector poses. These commands are sent to the robot controller, which drives the servo motors in real time to execute precise actions such as picking, moving, placing, or assembling the target object.
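To illustrate how a Cartesian target becomes joint angle commands, the sketch below solves the inverse kinematics of a hypothetical planar two-link arm analytically, with forward kinematics to check the result. Real six-axis arms need full 3-D kinematics, usually handled by the robot vendor's controller; the link lengths and function names here are assumptions for illustration.

```python
import math


def two_link_ik(x, y, l1, l2, elbow_sign=1):
    """Analytic inverse kinematics for a planar two-link arm.

    Returns joint angles (theta1, theta2) in radians that place the tip at
    (x, y). elbow_sign (+1 or -1) picks one of the two mirror-image solutions
    by fixing the sign of theta2. Raises ValueError if (x, y) is out of reach.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if c2 < -1.0 - 1e-9 or c2 > 1.0 + 1e-9:
        raise ValueError("target out of reach")
    c2 = max(-1.0, min(1.0, c2))  # absorb tiny rounding errors at full extension
    s2 = elbow_sign * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics, used here to check the IK solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y
```

In a full system, each waypoint of the planned path would be converted this way and the resulting joint angles streamed to the servo controller.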

5. Closed-Loop Feedback and Adjustment

To handle uncertainties during execution, such as positional deviations or shifts in object location, advanced vision-guided systems incorporate closed-loop feedback. During operation, the system re-acquires images and analyzes them in real time to verify task outcomes. If it detects a discrepancy, it adjusts the motion on the fly, improving accuracy and reliability.
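A closed feedback loop can be sketched as: observe the position, compute the error to the target, command a proportional correction, and repeat until the error is within tolerance. The `SimulatedRobot` class is a hypothetical stand-in for the camera and robot (it deliberately moves only 90% of each command to mimic a systematic error), and the gain and tolerance values are illustrative.

```python
import math


def closed_loop_correct(measure, move, target, gain=0.5, tolerance=1e-3,
                        max_iters=50):
    """Proportional visual-feedback loop (illustrative sketch).

    measure() returns the current (x, y) as observed by the camera;
    move(dx, dy) commands a relative motion. Returns the number of
    corrections issued before the error fell within tolerance.
    """
    for i in range(max_iters):
        x, y = measure()
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) <= tolerance:
            return i
        move(gain * ex, gain * ey)
    raise RuntimeError("position did not converge")


class SimulatedRobot:
    """Hypothetical robot whose moves fall 10% short of each command."""

    def __init__(self, x, y):
        self.x, self.y = x, y

    def measure(self):
        return self.x, self.y

    def move(self, dx, dy):
        self.x += 0.9 * dx
        self.y += 0.9 * dy
```

Because every iteration re-measures the actual position, the 10% shortfall is corrected on subsequent cycles instead of accumulating.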

Conclusion

From perception to execution, robotic vision-guided systems translate visual data into precise mechanical actions through a series of tightly integrated stages: image acquisition, preprocessing, feature extraction and recognition, hand-eye calibration, path planning, motion control, and closed-loop feedback. These systems significantly enhance the flexibility, precision, and efficiency of automated production lines.

With ongoing advancements in deep learning and artificial intelligence, the capabilities and application domains of vision-guided systems are poised for further expansion, unlocking new possibilities for smarter, more adaptable automation solutions.